Deploy a Service

Welcome to the Holos Deploy a Service guide. In this guide we'll explore how Holos helps the platform team work efficiently with a migration team at the fictional Bank of Holos. The migration team is responsible for migrating a service from an acquired company onto the bank's platform. The platform team supports the migration team by providing safe and consistent methods to add the service to the bank's platform.

We'll build on the concepts we learned in the Quickstart guide and explore how the migration team safely integrates a Helm chart from the acquired company into the bank's platform. We'll also explore how the platform team uses Holos and CUE to define consistent and safe structures for Namespaces, AppProjects, and HTTPRoutes for the benefit of other teams. The migration team uses these structures to integrate the Helm chart into the bank's platform safely and consistently in a self-service way, without filing tickets or interrupting the platform team.

What you'll need

Like our other guides, this guide is intended to be useful without needing to run the commands. If you'd like to render the platform and apply the manifests to a real Cluster, complete the Local Cluster Guide before this guide.

This guide relies on the concepts we covered in the Quickstart guide.

You'll need the following tools installed to run the commands in this guide.

- holos - to build the Platform.

- helm - to render Holos Components that wrap Helm charts.

- kubectl - to render Holos Components that render with Kustomize.

Fork the Git Repository

If you haven't already done so, fork the Bank of Holos then clone the repository to your local machine.

- Command

- Output

# Change YourName

git clone https://github.com/YourName/bank-of-holos

cd bank-of-holos

Cloning into 'bank-of-holos'...

remote: Enumerating objects: 1177, done.

remote: Counting objects: 100% (1177/1177), done.

remote: Compressing objects: 100% (558/558), done.

remote: Total 1177 (delta 394), reused 1084 (delta 303), pack-reused 0 (from 0)

Receiving objects: 100% (1177/1177), 2.89 MiB | 6.07 MiB/s, done.

Resolving deltas: 100% (394/394), done.

Run the rest of the commands in this guide from the root of the repository.

Component Kinds

As we explored in the Quickstart guide, the Bank of Holos is organized as a collection of software components. There are three kinds of components we work with day to day:

- Helm wraps a Helm chart.

- Kubernetes uses CUE to produce Kubernetes resources.

- Kustomize wraps a Kustomize Kustomization base.

Holos offers common functionality to every kind of component. We can:

- Mix-in additional resources. For example, an ExternalSecret to fetch a Secret for a Helm or Kustomize component.

- Write resource files to accompany the rendered kubernetes manifest. For example, an ArgoCD Application or Flux Kustomization.

- Post-process the rendered manifest with Kustomize, for example to consistently add common labels.

ComponentConfig in the Author API describes the fields common to all kinds of components.

We'll start with a Helm component to deploy the service, then compare it to a Kubernetes component that deploys the same service.

Namespaces

Let's imagine the Bank of Holos is working on a project named migration to migrate services from a smaller company they've acquired onto the Bank of Holos platform. One of the teams at the bank will own this project during the migration. Once migrated, a second team will take over ownership and maintenance.

When we start a new project, one of the first things we need is a Kubernetes Namespace so we have a place to deploy the service, set security policies, and keep track of resource usage with labels.

The platform team owns the namespaces component that manages these Namespace resources. The bank uses ArgoCD to deploy services with GitOps, so the migration team also needs an ArgoCD AppProject managed for them.

The platform team makes it easy for other teams to register the Namespaces and AppProjects they need by adding a new file to the repository in a self-service way. Imagine we're on the software development team performing the migration. First, we'll create projects/migration.cue to configure the Namespace and AppProject we need.

- projects/migration.cue

package holos

// Platform wide definitions
_Migration: Namespace: "migration"

// Register namespaces
_Namespaces: (_Migration.Namespace): _

// Register projects
_AppProjects: migration: _

Each of the highlighted lines has a specific purpose.

- Line 4 defines the _Migration hidden field. The team owning the migration project manages this struct.

- Line 7 registers the namespace with the namespaces component owned by the platform team. The _ value indicates the value is defined elsewhere in CUE. In this case, the platform team defines what a Namespace is.

- Line 10 registers the project similar to the namespace. The platform team is responsible for defining the value of an ArgoCD AppProject resource.
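To make the role of the _ value concrete, here is a minimal sketch of how a platform team might constrain _Namespaces so that a one-line registration yields a complete resource. The #Namespace schema, the example package name, and the exported namespaces field are assumptions made for illustration. The real definitions live in the bank-of-holos repository and may differ.

```cue
package example

// Hypothetical schema owned by the platform team.
#Namespace: {
    apiVersion: "v1"
    kind:       "Namespace"
    metadata: {
        name: string
        // The label always mirrors the name, so the two cannot drift apart.
        labels: "kubernetes.io/metadata.name": name
    }
}

// Every field registered in _Namespaces must be a #Namespace whose
// metadata.name matches the field name.
_Namespaces: [Name=string]: #Namespace & {metadata: name: Name}

// Registration by another team. The value _ (top) adds no constraints of its
// own, so the platform team's pattern above supplies every field.
_Namespaces: migration: _

// Regular field so the result can be inspected with cue export.
namespaces: _Namespaces
```

With a layout like this, the registering team states only the name it needs, and the shape of the resource stays under the control of whoever owns the pattern constraint.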

Render the platform to see how adding this file changes the platform as a whole.

- Command

- Output

holos render platform ./platform

rendered bank-ledger-db for cluster workload in 161.917541ms

rendered bank-accounts-db for cluster workload in 168.719625ms

rendered bank-ledger-writer for cluster workload in 168.695167ms

rendered bank-balance-reader for cluster workload in 172.392375ms

rendered bank-userservice for cluster workload in 173.080583ms

rendered bank-backend-config for cluster workload in 185.272458ms

rendered bank-secrets for cluster workload in 206.420583ms

rendered gateway for cluster workload in 123.106458ms

rendered bank-frontend for cluster workload in 302.898167ms

rendered httproutes for cluster workload in 142.201625ms

rendered bank-transaction-history for cluster workload in 161.31575ms

rendered bank-contacts for cluster workload in 156.310875ms

rendered app-projects for cluster workload in 108.839666ms

rendered ztunnel for cluster workload in 138.403375ms

rendered cni for cluster workload in 231.033083ms

rendered cert-manager for cluster workload in 172.654958ms

rendered external-secrets for cluster workload in 132.204292ms

rendered local-ca for cluster workload in 94.759042ms

rendered istiod for cluster workload in 420.427834ms

rendered argocd for cluster workload in 298.130334ms

rendered gateway-api for cluster workload in 226.0205ms

rendered namespaces for cluster workload in 110.922792ms

rendered base for cluster workload in 537.713667ms

rendered external-secrets-crds for cluster workload in 606.543875ms

rendered crds for cluster workload in 972.991959ms

rendered platform in 1.284129042s

We can see the changes clearly with git.

- Command

- Output

git status

On branch jeff/251-deploy-service

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: deploy/clusters/local/components/app-projects/app-projects.gen.yaml

modified: deploy/clusters/local/components/namespaces/namespaces.gen.yaml

Untracked files:

(use "git add <file>..." to include in what will be committed)

projects/migration.cue

no changes added to commit (use "git add" and/or "git commit -a")

- Command

- Output

git diff deploy

diff --git a/deploy/clusters/local/components/app-projects/app-projects.gen.yaml b/deploy/clusters/local/components/app-projects/app-projects.gen.yaml
index bdc8371..42cb01a 100644
--- a/deploy/clusters/local/components/app-projects/app-projects.gen.yaml
+++ b/deploy/clusters/local/components/app-projects/app-projects.gen.yaml
@@ -50,6 +50,23 @@ spec:
   sourceRepos:
   - '*'
 ---
+apiVersion: argoproj.io/v1alpha1
+kind: AppProject
+metadata:
+  name: migration
+  namespace: argocd
+spec:
+  clusterResourceWhitelist:
+  - group: '*'
+    kind: '*'
+  description: Holos managed AppProject
+  destinations:
+  - namespace: '*'
+    server: '*'
+  sourceRepos:
+  - '*'
+---
 apiVersion: argoproj.io/v1alpha1
 kind: AppProject
diff --git a/deploy/clusters/local/components/namespaces/namespaces.gen.yaml b/deploy/clusters/local/components/namespaces/namespaces.gen.yaml
index de96ab9..7ddd870 100644
--- a/deploy/clusters/local/components/namespaces/namespaces.gen.yaml
+++ b/deploy/clusters/local/components/namespaces/namespaces.gen.yaml
@@ -62,3 +62,11 @@ metadata:
     kubernetes.io/metadata.name: istio-system
 kind: Namespace
 apiVersion: v1
+---
+metadata:
+  name: migration
+  labels:
+    kubernetes.io/metadata.name: migration
+kind: Namespace
+apiVersion: v1

We can see how adding a new file with a couple of lines created the Namespace and AppProject resources the development team needs to start the migration. The development team didn't need to think about the details of what goes into a Namespace or an AppProject resource. They simply added a file expressing that they need these resources.

Because all configuration in CUE is unified, both the platform team and development team can work safely together. The platform team defines the shape of the Namespace and AppProject; the development team registers them.

At the bank, a code owners file can further optimize self-service and collaboration. Files added to the projects/ directory can automatically request an approval from the platform team and block merges by other teams.

Let's add and commit these changes.

- Command

- Output

git add projects/migration.cue deploy

git commit -m 'manage a namespace for the migration project'

[main 6fb70b3] manage a namespace for the migration project

3 files changed, 38 insertions(+)

create mode 100644 projects/migration.cue

Now that we have a Namespace, we're ready to add a component to migrate the podinfo service to the platform.

Helm Component

Let's imagine the service we're migrating was deployed with a Helm chart. We'll use the upstream podinfo Helm chart as a stand-in for the chart we're migrating to the bank's platform. We'll wrap the Helm chart in a Helm component to migrate it onto the bank's platform.

We'll start by creating a directory for the component.

mkdir -p projects/migration/components/podinfo

We use projects/migration so we have a place to add CUE files that affect all migration project components. CUE files for components are easily moved into sub-directories, for example a web tier and a database tier. Starting a project with one components/ sub-directory is a good way to get going and easy to change later.

Components are usually organized into a file system tree reflecting the owners of groups of components. We do this to support code owners and limit the scope of configuration changes.

Next, create the projects/migration/components/podinfo/podinfo.cue file with the following content.

- projects/migration/components/podinfo/podinfo.cue

package holos

import ks "sigs.k8s.io/kustomize/api/types"

// Produce a helm chart build plan.
_Helm.BuildPlan

_Helm: #Helm & {
    Name: "podinfo"
    Namespace: _Migration.Namespace

    Chart: {
        version: "6.6.2"
        repository: {
            name: "podinfo"
            url: "https://stefanprodan.github.io/podinfo"
        }
    }

    KustomizeConfig: Kustomization: ks.#Kustomization & {
        namespace: Namespace
    }

    // Allow the platform team to route traffic into our namespace.
    Resources: ReferenceGrant: grant: _ReferenceGrant & {
        metadata: namespace: Namespace
    }
}

Line 3: We import the type definitions for a Kustomization from the kubernetes project to type check the Kustomization file configured under KustomizeConfig.

Type definitions have already been imported into the bank-of-holos repository. When we work with Kubernetes resources we often need to import their type definitions using cue get go or timoni mod vendor crds.

Line 6: This component produces a BuildPlan that wraps a Helm Chart.

Line 9: The name of the component is podinfo. Holos uses the Component's Name as the sub-directory name when it writes the rendered manifest into deploy/. Normally this name also matches the directory and file name of the component, podinfo/podinfo.cue, but holos doesn't enforce this convention.

Line 10: We use the same namespace we registered with the namespaces component as the value we pass to Helm. This is a good example of Holos offering safety and consistency with CUE: if we change the value of _Migration.Namespace, multiple components stay consistent.

Line 21: Unfortunately, the Helm chart doesn't set the metadata.namespace field for the resources it generates, which creates a security problem. The resources will be created in the wrong namespace. We don't want to modify the upstream chart because it creates a maintenance burden. We solve the problem by having Holos post-process the Helm output with Kustomize. This ensures all resources go into the correct namespace.

Line 26: The migration team grants the platform team permission to route traffic into the migration Namespace using a ReferenceGrant.

Notice this is also the first time we've seen CUE's & unification operator used to type-check a struct.

ks.#Kustomization & { namespace: Namespace }

Holos makes it easy for the migration team to mix-in the ReferenceGrant to the Helm chart from the company the bank acquired.

This is a good example of how Holos enables multiple teams to work together efficiently. In this example the migration team adds a resource that grants the platform team the access it needs to integrate a service from an acquired company into the bank's platform.

Unification ensures holos render platform fails quickly if the value does not pass type checking against the official Kustomization API spec. We'll see this often in Holos: unification with type definitions makes changes safer and easier.
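The following sketch illustrates what failing quickly means in practice. It uses a hypothetical, heavily simplified #Kustomization definition in place of the imported ks.#Kustomization, so the field list is an assumption rather than the real API.

```cue
package example

// Hypothetical, simplified stand-in for ks.#Kustomization.
#Kustomization: {
    apiVersion: "kustomize.config.k8s.io/v1beta1"
    kind:       "Kustomization"
    namespace?: string
    resources?: [...string]
}

// Unifies cleanly because namespace is a string.
ok: #Kustomization & {namespace: "migration"}

// Uncommenting either field below makes evaluation fail:
//
// A value of the wrong type conflicts with the string constraint.
// typeError: #Kustomization & {namespace: 42}
//
// Definitions are closed, so a misspelled field name is rejected.
// typoError: #Kustomization & {namepsace: "migration"}
```

Both mistakes surface when the configuration is evaluated, before any manifest is written or applied to a cluster.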

Quite a few upstream vendor Helm charts don't set the metadata.namespace, creating problems like this. Keep the EnableKustomizePostProcessor feature in mind if you've run into this problem before.

Our new podinfo component needs to be registered so it will be rendered with the platform. We could render the new component directly with holos render component, but it's usually faster and easier to register it and render the whole platform. This way we get an early indicator of how it's going to integrate with the whole. If you've ever spent considerable time building something only to have it take weeks to integrate with the rest of your organization, you've probably felt that pain, and the relief that integrating early and often brings.

Register the new component by creating platform/migration-podinfo.cue with the following content.

- platform/migration-podinfo.cue

package holos

// Manage on workload clusters only
for Cluster in _Fleets.workload.clusters {
    _Platform: Components: "\(Cluster.name)/podinfo": {
        name: "podinfo"
        component: "projects/migration/components/podinfo"
        cluster: Cluster.name
    }
}

The behavior of files in the platform/ directory is covered in detail in the how platform rendering works section of the Quickstart guide.
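As a rough illustration of what the comprehension in platform/migration-podinfo.cue does, the sketch below runs the same loop over a hypothetical fleet with two clusters. The cluster names east and west, the simplified shape of _Platform.Components, and the exported components field are assumptions; the real _Fleets and _Platform structs are defined by the platform team.

```cue
package example

// Hypothetical fleet data standing in for the platform team's _Fleets.
_Fleets: workload: clusters: {
    east: name: "east"
    west: name: "west"
}

// Hypothetical, simplified shape of a registered component.
_Platform: Components: [string]: {
    name:      string
    component: string
    cluster:   string
}

// The same comprehension as platform/migration-podinfo.cue: one entry is
// registered for every cluster in the workload fleet.
for Cluster in _Fleets.workload.clusters {
    _Platform: Components: "\(Cluster.name)/podinfo": {
        name:      "podinfo"
        component: "projects/migration/components/podinfo"
        cluster:   Cluster.name
    }
}

// Regular field so the result can be inspected with cue export.
components: _Platform.Components
```

In this sketch, adding a cluster to the fleet registers podinfo for it without touching the registration file, since the loop ranges over whatever clusters the fleet contains.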

Before we render the platform, we want to make sure our podinfo component, and all future migration project components, are automatically associated with the ArgoCD AppProject we managed alongside the Namespace for the project.

Create projects/migration/app-project.cue with the following content.

- projects/migration/app-project.cue

package holos

// Assign ArgoCD Applications to the migration AppProject

_ArgoConfig: AppProject: _AppProjects.migration.metadata.name

This file provides consistency and safety in a number of ways:

- All components under projects/migration/ will automatically have their ArgoCD Application assigned to the migration AppProject.

- holos render platform errors out if _AppProjects.migration is not defined. We defined it in projects/migration.cue.

- The platform team is responsible for managing the AppProject resource itself. The team doing the migration refers to the metadata.name field defined by the platform team.
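The second point can be seen in a small, self-contained sketch. The pattern constraint on _AppProjects and the exported argoConfig field are assumptions for illustration, not the platform team's actual definitions.

```cue
package example

// Hypothetical, simplified platform-team definition: every registered
// project gets a metadata.name equal to its key.
_AppProjects: [Name=string]: metadata: name: Name

// Registration, as in projects/migration.cue. If this line is removed, the
// reference below points at a field that does not exist and export fails.
_AppProjects: migration: _

// Reference the name defined by the platform team instead of repeating the
// string "migration" here.
_ArgoConfig: AppProject: _AppProjects.migration.metadata.name

// Regular field so the result can be inspected with cue export.
argoConfig: _ArgoConfig
```

Referring to the field rather than hard-coding the name means a typo or a missing registration is caught during evaluation instead of showing up later as an Application assigned to a project that does not exist.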

Let's render the platform and see if our migrated service works.

- Command

- Output

holos render platform ./platform

rendered bank-ledger-db for cluster workload in 150.004875ms

rendered bank-accounts-db for cluster workload in 150.807042ms

rendered bank-ledger-writer for cluster workload in 158.850125ms

rendered bank-userservice for cluster workload in 163.657375ms

rendered bank-balance-reader for cluster workload in 167.437625ms

rendered bank-backend-config for cluster workload in 175.174542ms

rendered bank-secrets for cluster workload in 213.723917ms

rendered gateway for cluster workload in 124.668542ms

rendered httproutes for cluster workload in 138.059917ms

rendered bank-contacts for cluster workload in 153.5245ms

rendered bank-transaction-history for cluster workload in 154.283458ms

rendered bank-frontend for cluster workload in 313.496583ms

rendered app-projects for cluster workload in 110.870083ms

rendered cni for cluster workload in 598.301167ms

rendered ztunnel for cluster workload in 523.686958ms

rendered cert-manager for cluster workload in 436.820125ms

rendered external-secrets for cluster workload in 128.181125ms

rendered istiod for cluster workload in 836.051416ms

rendered argocd for cluster workload in 718.162666ms

rendered local-ca for cluster workload in 95.567583ms

rendered podinfo for cluster workload in 743.459292ms

rendered gateway-api for cluster workload in 223.475125ms

rendered base for cluster workload in 956.264625ms

rendered namespaces for cluster workload in 113.534792ms

rendered external-secrets-crds for cluster workload in 598.5455ms

rendered crds for cluster workload in 1.399488458s

rendered platform in 1.704023375s

The platform rendered without error and we see the line rendered podinfo for cluster workload in 743.459292ms. Let's take a look at the output manifests.

- Command

- Output

git add .

git status

On branch jeff/291-consistent-fields

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

new file: deploy/clusters/local/components/podinfo/podinfo.gen.yaml

new file: deploy/clusters/local/gitops/podinfo.gen.yaml

new file: platform/migration-podinfo.cue

new file: projects/migration/app-project.cue

new file: projects/migration/components/podinfo/podinfo.cue

Adding the platform and component CUE files and rendering the platform results in a new manifest for the Helm output along with an ArgoCD Application for GitOps. Here's what they look like:

- deploy/clusters/local/gitops/podinfo.gen.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  labels:
    holos.run/component.name: podinfo
  name: podinfo
  namespace: argocd
spec:
  destination:
    server: https://kubernetes.default.svc
  project: migration
  source:
    path: deploy/clusters/local/components/podinfo
    repoURL: https://github.com/holos-run/bank-of-holos
    targetRevision: main

- deploy/clusters/local/components/podinfo/podinfo.gen.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: podinfo
    app.kubernetes.io/version: 6.6.2
    argocd.argoproj.io/instance: podinfo
    helm.sh/chart: podinfo-6.6.2
    holos.run/component.name: podinfo
  name: podinfo
  namespace: migration
spec:
  ports:
  - name: http
    port: 9898
    protocol: TCP
    targetPort: http
  - name: grpc
    port: 9999
    protocol: TCP
    targetPort: grpc
  selector:
    app.kubernetes.io/name: podinfo
    argocd.argoproj.io/instance: podinfo
    holos.run/component.name: podinfo
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: podinfo
    app.kubernetes.io/version: 6.6.2
    argocd.argoproj.io/instance: podinfo
    helm.sh/chart: podinfo-6.6.2
    holos.run/component.name: podinfo
  name: podinfo
  namespace: migration
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: podinfo
      argocd.argoproj.io/instance: podinfo
      holos.run/component.name: podinfo
  strategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      annotations:
        prometheus.io/port: "9898"
        prometheus.io/scrape: "true"
      labels:
        app.kubernetes.io/name: podinfo
        argocd.argoproj.io/instance: podinfo
        holos.run/component.name: podinfo
    spec:
      containers:
      - command:
        - ./podinfo
        - --port=9898
        - --cert-path=/data/cert
        - --port-metrics=9797
        - --grpc-port=9999
        - --grpc-service-name=podinfo
        - --level=info
        - --random-delay=false
        - --random-error=false
        env:
        - name: PODINFO_UI_COLOR
          value: '#34577c'
        image: ghcr.io/stefanprodan/podinfo:6.6.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          exec:
            command:
            - podcli
            - check
            - http
            - localhost:9898/healthz
          failureThreshold: 3
          initialDelaySeconds: 1
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: podinfo
        ports:
        - containerPort: 9898
          name: http
          protocol: TCP
        - containerPort: 9797
          name: http-metrics
          protocol: TCP
        - containerPort: 9999
          name: grpc
          protocol: TCP
        readinessProbe:
          exec:
            command:
            - podcli
            - check
            - http
            - localhost:9898/readyz
          failureThreshold: 3
          initialDelaySeconds: 1
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        resources:
          limits: null
          requests:
            cpu: 1m
            memory: 16Mi
        volumeMounts:
        - mountPath: /data
          name: data
      terminationGracePeriodSeconds: 30
      volumes:
      - emptyDir: {}
        name: data
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: ReferenceGrant
metadata:
  labels:
    argocd.argoproj.io/instance: podinfo
    holos.run/component.name: podinfo
  name: istio-ingress
  namespace: migration
spec:
  from:
  - group: gateway.networking.k8s.io
    kind: HTTPRoute
    namespace: istio-ingress
  to:
  - group: ""
    kind: Service

Let's commit these changes and explore how we can manage Helm values more safely now that the chart is managed with Holos.

- Command

- Output

git add .

git commit -m 'register the migration project podinfo component with the platform'

[main 31197e2] register the migration project podinfo component with the platform

5 files changed, 205 insertions(+)

create mode 100644 deploy/clusters/local/components/podinfo/podinfo.gen.yaml

create mode 100644 deploy/clusters/local/gitops/podinfo.gen.yaml

create mode 100644 platform/migration-podinfo.cue

create mode 100644 projects/migration/app-project.cue

create mode 100644 projects/migration/components/podinfo/podinfo.cue

And push the changes.

- Command

- Output

git push

Total 0 (delta 0), reused 0 (delta 0), pack-reused 0

To github.com:jeffmccune/bank-of-holos.git

6fb70b3..b40879f main -> main

We often need to import the chart's values.yaml file when we manage a Helm chart with Holos.

We'll cover this in depth in another guide. For now the important thing to know is we use cue import to import a values.yaml file into CUE. Holos caches charts in the vendor/ directory of the component if you need to import values.yaml for your own chart before the guide is published.

Expose the Service

We've migrated the Deployment and Service using the podinfo Helm chart we got from the company the bank acquired, but we still haven't exposed the service.

Let's see how Holos makes it easier and safer to integrate components into the platform as a whole. Bank of Holos uses HTTPRoute resources from the new Gateway API. The company the bank acquired uses older Ingress resources from earlier Kubernetes versions.

The platform team at the bank defines a _HTTPRoutes struct for other teams at the bank to register with. The _HTTPRoutes struct is similar to the _Namespaces and _AppProjects structs we've already seen.

As a member of the migration team, we'll add the file projects/migration-routes.cue to expose the service we're migrating.

Go ahead and create this file (if it hasn't been created previously) with the following content.

- projects/migration-routes.cue

package holos

// Route migration-podinfo.example.com to port 9898 of Service podinfo in the
// migration namespace.
_HTTPRoutes: "migration-podinfo": _backendRefs: {
    podinfo: {
        port: 9898
        namespace: _Migration.Namespace
    }
}

- projects/httproutes.cue

package holos

import v1 "gateway.networking.k8s.io/httproute/v1"

// Struct containing HTTPRoute configurations. These resources are managed in
// the istio-ingress namespace. Other components define the routes they need
// close to the root of configuration.
_HTTPRoutes: #HTTPRoutes

// #HTTPRoutes defines the schema of managed HTTPRoute resources for the
// platform.
#HTTPRoutes: {
    // For the guides, we simplify this down to a flat namespace.
    [Name=string]: v1.#HTTPRoute & {
        let HOST = Name + "." + _Organization.Domain

        _backendRefs: [NAME=string]: {
            name: NAME
            namespace: string
            port: number | *80
        }

        metadata: name: Name
        metadata: namespace: _Istio.Gateway.Namespace
        metadata: labels: app: Name
        spec: hostnames: [HOST]
        spec: parentRefs: [{
            name: "default"
            namespace: metadata.namespace
        }]
        spec: rules: [
            {
                matches: [{path: {type: "PathPrefix", value: "/"}}]
                backendRefs: [for x in _backendRefs {x}]
            },
        ]
    }
}

In this file we're adding a field to the _HTTPRoutes struct the platform team defined for us.

You might be wondering how we knew all of these fields to put into this file. Often, the platform team provides instructions like this guide, or we can also look directly at how they defined the struct in the projects/httproutes.cue file.

There are a few new concepts to notice in the projects/httproutes.cue file. The most important things the migration team takes away from this file are:

- The platform team requires a gateway.networking.k8s.io/httproute/v1 HTTPRoute.

- Line 17 uses a hidden field so we can provide backend references as a struct instead of a list.

- Line 34 uses a comprehension to convert the struct to a list.

We can look up the spec for the fields we need to provide in the Gateway API reference documentation for HTTPRoute.

Lists are more difficult to work with in CUE than structs because they're ordered. Prefer structs to lists so fields can be unified across many files owned by many teams.

You'll see this pattern again and again. The pattern is to use a hidden field to take input and pair it with a comprehension to produce output for an API that expects a list.
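Here is the pattern on its own, as a minimal sketch outside of any HTTPRoute. The two contributions are written in one file for brevity, and the frontend entry and the bank-frontend namespace value are assumptions chosen only to show a second contributor.

```cue
package example

// Hidden input struct. Entries are keyed by name, so separate files owned by
// separate teams can each add or refine an entry without coordinating on
// list positions.
_backendRefs: [NAME=string]: {
    name:      NAME
    namespace: string
    port:      number | *80
}

// Contribution from the migration team.
_backendRefs: podinfo: {
    namespace: "migration"
    port:      9898
}

// Hypothetical contribution from another team. The port falls back to the
// default of 80 because none is given.
_backendRefs: frontend: namespace: "bank-frontend"

// Output for an API that expects a list: a comprehension converts the
// unified struct into list elements.
backendRefs: [for x in _backendRefs {x}]
```

Had both teams written to a list directly, each would need to agree on which index it owns. Keying by name avoids that, and the list is only produced at the last step.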

Let's render the platform to see the fully rendered HTTPRoute.

- Command

- Output

holos render platform ./platform

rendered bank-ledger-db for cluster workload in 142.939375ms

rendered bank-accounts-db for cluster workload in 151.787959ms

rendered bank-ledger-writer for cluster workload in 161.074917ms

rendered bank-balance-reader for cluster workload in 161.303833ms

rendered bank-userservice for cluster workload in 161.752667ms

rendered bank-backend-config for cluster workload in 169.740959ms

rendered bank-secrets for cluster workload in 207.505417ms

rendered gateway for cluster workload in 122.961334ms

rendered bank-frontend for cluster workload in 306.386333ms

rendered bank-transaction-history for cluster workload in 164.252042ms

rendered bank-contacts for cluster workload in 155.57775ms

rendered httproutes for cluster workload in 154.3695ms

rendered cni for cluster workload in 154.227458ms

rendered istiod for cluster workload in 221.045542ms

rendered ztunnel for cluster workload in 147.59975ms

rendered app-projects for cluster workload in 127.480333ms

rendered podinfo for cluster workload in 184.9705ms

rendered base for cluster workload in 359.061208ms

rendered cert-manager for cluster workload in 189.696042ms

rendered external-secrets for cluster workload in 148.129083ms

rendered local-ca for cluster workload in 104.72ms

rendered argocd for cluster workload in 294.485375ms

rendered namespaces for cluster workload in 122.247042ms

rendered gateway-api for cluster workload in 219.049958ms

rendered external-secrets-crds for cluster workload in 340.379125ms

rendered crds for cluster workload in 424.435083ms

rendered platform in 732.025125ms

Git diff shows us what changed.

- Command

- Output

git diff

diff --git a/deploy/clusters/local/components/httproutes/httproutes.gen.yaml b/deploy/clusters/local/components/httproutes/httproutes.gen.yaml
index 06f7c91..349e070 100644
--- a/deploy/clusters/local/components/httproutes/httproutes.gen.yaml
+++ b/deploy/clusters/local/components/httproutes/httproutes.gen.yaml
@@ -47,3 +47,28 @@ spec:
     - path:
         type: PathPrefix
         value: /
+---
+apiVersion: gateway.networking.k8s.io/v1
+kind: HTTPRoute
+metadata:
+  labels:
+    app: migration-podinfo
+    argocd.argoproj.io/instance: httproutes
+    holos.run/component.name: httproutes
+  name: migration-podinfo
+  namespace: istio-ingress
+spec:
+  hostnames:
+  - migration-podinfo.holos.localhost
+  parentRefs:
+  - name: default
+    namespace: istio-ingress
+  rules:
+  - backendRefs:
+    - name: podinfo
+      namespace: migration
+      port: 9898
+    matches:
+    - path:
+        type: PathPrefix
+        value: /

We see the HTTPRoute rendered by the httproutes component owned by the platform team will expose our service at https://migration-podinfo.holos.localhost and route requests to port 9898 of the podinfo Service in our migration Namespace.

At this point the migration team might submit a pull request, which could trigger a code review from the platform team.

The platform team is likely very busy. Holos and CUE perform strong type checking on this HTTPRoute, so the platform team may automate this approval with a pull request check, or not need a cross-functional review at all.

Cross-functional changes are safer and easier because the migration team cannot render the platform unless the HTTPRoute is valid as defined by the platform team.

This all looks good, so let's commit the changes and try it out.

- Command

- Output

git add .

git commit -m 'add httproute for migration-podinfo.holos.localhost'

[main 843e429] add httproute for migration-podinfo.holos.localhost

2 files changed, 37 insertions(+)

create mode 100644 projects/migration-routes.cue

And push the changes for GitOps.

- Command

- Output

git push

Enumerating objects: 18, done.

Counting objects: 100% (18/18), done.

Delta compression using up to 14 threads

Compressing objects: 100% (7/7), done.

Writing objects: 100% (10/10), 1.59 KiB | 1.59 MiB/s, done.

Total 10 (delta 4), reused 0 (delta 0), pack-reused 0

remote: Resolving deltas: 100% (4/4), completed with 4 local objects.

To github.com:jeffmccune/bank-of-holos.git

b40879f..843e429 main -> main

Apply the Manifests

Now that we've integrated the podinfo service from the acquired company into the bank's platform, let's apply the configuration and deploy the service. We'll apply the manifests in a specific order to get the whole cluster up quickly.

Let's get the Bank of Holos platform up and running so we can see if migrating the podinfo Helm chart from the acquired company works. Run the apply script in the bank-of-holos repository after resetting your cluster following the Local Cluster Guide.

- Command

- Output

./scripts/apply

+ kubectl apply --server-side=true -f deploy/clusters/local/components/namespaces/namespaces.gen.yaml

namespace/argocd serverside-applied

namespace/bank-backend serverside-applied

namespace/bank-frontend serverside-applied

namespace/bank-security serverside-applied

namespace/cert-manager serverside-applied

namespace/external-secrets serverside-applied

namespace/istio-ingress serverside-applied

namespace/istio-system serverside-applied

namespace/migration serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/argocd-crds/argocd-crds.gen.yaml

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/gateway-api/gateway-api.gen.yaml

customresourcedefinition.apiextensions.k8s.io/gatewayclasses.gateway.networking.k8s.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/gateways.gateway.networking.k8s.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/grpcroutes.gateway.networking.k8s.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/httproutes.gateway.networking.k8s.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/referencegrants.gateway.networking.k8s.io serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/external-secrets-crds/external-secrets-crds.gen.yaml

customresourcedefinition.apiextensions.k8s.io/acraccesstokens.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/clusterexternalsecrets.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/clustersecretstores.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/ecrauthorizationtokens.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/externalsecrets.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/fakes.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/gcraccesstokens.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/githubaccesstokens.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/passwords.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/pushsecrets.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/secretstores.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/uuids.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/vaultdynamicsecrets.generators.external-secrets.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/webhooks.generators.external-secrets.io serverside-applied

+ kubectl wait --for=condition=Established crd --all --timeout=300s

customresourcedefinition.apiextensions.k8s.io/acraccesstokens.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/addons.k3s.cattle.io condition met

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io condition met

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io condition met

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io condition met

customresourcedefinition.apiextensions.k8s.io/authorizationpolicies.security.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/clusterexternalsecrets.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/clustersecretstores.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/destinationrules.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/ecrauthorizationtokens.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/envoyfilters.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/etcdsnapshotfiles.k3s.cattle.io condition met

customresourcedefinition.apiextensions.k8s.io/externalsecrets.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/fakes.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/gatewayclasses.gateway.networking.k8s.io condition met

customresourcedefinition.apiextensions.k8s.io/gateways.gateway.networking.k8s.io condition met

customresourcedefinition.apiextensions.k8s.io/gateways.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/gcraccesstokens.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/githubaccesstokens.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/grpcroutes.gateway.networking.k8s.io condition met

customresourcedefinition.apiextensions.k8s.io/helmchartconfigs.helm.cattle.io condition met

customresourcedefinition.apiextensions.k8s.io/helmcharts.helm.cattle.io condition met

customresourcedefinition.apiextensions.k8s.io/httproutes.gateway.networking.k8s.io condition met

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io condition met

customresourcedefinition.apiextensions.k8s.io/passwords.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/peerauthentications.security.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/proxyconfigs.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/pushsecrets.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/referencegrants.gateway.networking.k8s.io condition met

customresourcedefinition.apiextensions.k8s.io/requestauthentications.security.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/secretstores.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/serviceentries.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/sidecars.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/telemetries.telemetry.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/uuids.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/vaultdynamicsecrets.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/virtualservices.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/wasmplugins.extensions.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/webhooks.generators.external-secrets.io condition met

customresourcedefinition.apiextensions.k8s.io/workloadentries.networking.istio.io condition met

customresourcedefinition.apiextensions.k8s.io/workloadgroups.networking.istio.io condition met

+ kubectl apply --server-side=true -f deploy/clusters/local/components/external-secrets/external-secrets.gen.yaml

serviceaccount/external-secrets-cert-controller serverside-applied

serviceaccount/external-secrets serverside-applied

serviceaccount/external-secrets-webhook serverside-applied

secret/external-secrets-webhook serverside-applied

clusterrole.rbac.authorization.k8s.io/external-secrets-cert-controller serverside-applied

clusterrole.rbac.authorization.k8s.io/external-secrets-controller serverside-applied

clusterrole.rbac.authorization.k8s.io/external-secrets-view serverside-applied

clusterrole.rbac.authorization.k8s.io/external-secrets-edit serverside-applied

clusterrole.rbac.authorization.k8s.io/external-secrets-servicebindings serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/external-secrets-cert-controller serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/external-secrets-controller serverside-applied

role.rbac.authorization.k8s.io/external-secrets-leaderelection serverside-applied

rolebinding.rbac.authorization.k8s.io/external-secrets-leaderelection serverside-applied

service/external-secrets-webhook serverside-applied

deployment.apps/external-secrets-cert-controller serverside-applied

deployment.apps/external-secrets serverside-applied

deployment.apps/external-secrets-webhook serverside-applied

validatingwebhookconfiguration.admissionregistration.k8s.io/secretstore-validate serverside-applied

validatingwebhookconfiguration.admissionregistration.k8s.io/externalsecret-validate serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/cert-manager/cert-manager.gen.yaml

serviceaccount/cert-manager-cainjector serverside-applied

serviceaccount/cert-manager serverside-applied

serviceaccount/cert-manager-webhook serverside-applied

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-cluster-view serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-view serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-edit serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests serverside-applied

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews serverside-applied

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection serverside-applied

role.rbac.authorization.k8s.io/cert-manager:leaderelection serverside-applied

role.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving serverside-applied

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection serverside-applied

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection serverside-applied

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving serverside-applied

service/cert-manager serverside-applied

service/cert-manager-webhook serverside-applied

deployment.apps/cert-manager-cainjector serverside-applied

deployment.apps/cert-manager serverside-applied

deployment.apps/cert-manager-webhook serverside-applied

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook serverside-applied

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/local-ca/local-ca.gen.yaml

clusterissuer.cert-manager.io/local-ca serverside-applied

+ kubectl wait --for=condition=Ready clusterissuer/local-ca --timeout=30s

clusterissuer.cert-manager.io/local-ca condition met

+ kubectl apply --server-side=true -f deploy/clusters/local/components/argocd/argocd.gen.yaml

serviceaccount/argocd-application-controller serverside-applied

serviceaccount/argocd-applicationset-controller serverside-applied

serviceaccount/argocd-notifications-controller serverside-applied

serviceaccount/argocd-redis-secret-init serverside-applied

serviceaccount/argocd-repo-server serverside-applied

serviceaccount/argocd-server serverside-applied

role.rbac.authorization.k8s.io/argocd-application-controller serverside-applied

role.rbac.authorization.k8s.io/argocd-applicationset-controller serverside-applied

role.rbac.authorization.k8s.io/argocd-notifications-controller serverside-applied

role.rbac.authorization.k8s.io/argocd-redis-secret-init serverside-applied

role.rbac.authorization.k8s.io/argocd-repo-server serverside-applied

role.rbac.authorization.k8s.io/argocd-server serverside-applied

clusterrole.rbac.authorization.k8s.io/argocd-application-controller serverside-applied

clusterrole.rbac.authorization.k8s.io/argocd-notifications-controller serverside-applied

clusterrole.rbac.authorization.k8s.io/argocd-server serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-application-controller serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-notifications-controller serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-redis-secret-init serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-repo-server serverside-applied

rolebinding.rbac.authorization.k8s.io/argocd-server serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/argocd-notifications-controller serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/argocd-server serverside-applied

configmap/argocd-cm serverside-applied

configmap/argocd-cmd-params-cm serverside-applied

configmap/argocd-gpg-keys-cm serverside-applied

configmap/argocd-notifications-cm serverside-applied

configmap/argocd-rbac-cm serverside-applied

configmap/argocd-redis-health-configmap serverside-applied

configmap/argocd-ssh-known-hosts-cm serverside-applied

configmap/argocd-tls-certs-cm serverside-applied

secret/argocd-notifications-secret serverside-applied

secret/argocd-secret serverside-applied

service/argocd-applicationset-controller serverside-applied

service/argocd-redis serverside-applied

service/argocd-repo-server serverside-applied

service/argocd-server serverside-applied

deployment.apps/argocd-applicationset-controller serverside-applied

deployment.apps/argocd-notifications-controller serverside-applied

deployment.apps/argocd-redis serverside-applied

deployment.apps/argocd-repo-server serverside-applied

deployment.apps/argocd-server serverside-applied

statefulset.apps/argocd-application-controller serverside-applied

job.batch/argocd-redis-secret-init serverside-applied

referencegrant.gateway.networking.k8s.io/istio-ingress serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/app-projects/app-projects.gen.yaml

appproject.argoproj.io/bank-backend serverside-applied

appproject.argoproj.io/bank-frontend serverside-applied

appproject.argoproj.io/bank-security serverside-applied

appproject.argoproj.io/migration serverside-applied

appproject.argoproj.io/platform serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/istio-base/istio-base.gen.yaml

customresourcedefinition.apiextensions.k8s.io/authorizationpolicies.security.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/destinationrules.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/envoyfilters.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/gateways.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/peerauthentications.security.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/proxyconfigs.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/requestauthentications.security.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/serviceentries.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/sidecars.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/telemetries.telemetry.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/virtualservices.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/wasmplugins.extensions.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/workloadentries.networking.istio.io serverside-applied

customresourcedefinition.apiextensions.k8s.io/workloadgroups.networking.istio.io serverside-applied

serviceaccount/istio-reader-service-account serverside-applied

validatingwebhookconfiguration.admissionregistration.k8s.io/istiod-default-validator serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/istiod/istiod.gen.yaml

serviceaccount/istiod serverside-applied

role.rbac.authorization.k8s.io/istiod serverside-applied

clusterrole.rbac.authorization.k8s.io/istio-reader-clusterrole-istio-system serverside-applied

clusterrole.rbac.authorization.k8s.io/istiod-clusterrole-istio-system serverside-applied

clusterrole.rbac.authorization.k8s.io/istiod-gateway-controller-istio-system serverside-applied

rolebinding.rbac.authorization.k8s.io/istiod serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istio-reader-clusterrole-istio-system serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istiod-clusterrole-istio-system serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istiod-gateway-controller-istio-system serverside-applied

configmap/istio serverside-applied

configmap/istio-sidecar-injector serverside-applied

service/istiod serverside-applied

deployment.apps/istiod serverside-applied

poddisruptionbudget.policy/istiod serverside-applied

horizontalpodautoscaler.autoscaling/istiod serverside-applied

mutatingwebhookconfiguration.admissionregistration.k8s.io/istio-sidecar-injector serverside-applied

validatingwebhookconfiguration.admissionregistration.k8s.io/istio-validator-istio-system serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/istio-cni/istio-cni.gen.yaml

serviceaccount/istio-cni serverside-applied

configmap/istio-cni-config serverside-applied

clusterrole.rbac.authorization.k8s.io/istio-cni serverside-applied

clusterrole.rbac.authorization.k8s.io/istio-cni-repair-role serverside-applied

clusterrole.rbac.authorization.k8s.io/istio-cni-ambient serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istio-cni serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istio-cni-repair-rolebinding serverside-applied

clusterrolebinding.rbac.authorization.k8s.io/istio-cni-ambient serverside-applied

daemonset.apps/istio-cni-node serverside-applied

+ kubectl wait --for=condition=Ready pod -l k8s-app=istio-cni-node --timeout=300s -n istio-system

pod/istio-cni-node-7kfbh condition met

+ kubectl apply --server-side=true -f deploy/clusters/local/components/istio-ztunnel/istio-ztunnel.gen.yaml

serviceaccount/ztunnel serverside-applied

daemonset.apps/ztunnel serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/istio-gateway/istio-gateway.gen.yaml

certificate.cert-manager.io/gateway-cert serverside-applied

gateway.gateway.networking.k8s.io/default serverside-applied

serviceaccount/default-istio serverside-applied

+ kubectl wait --for=condition=Ready pod -l istio.io/gateway-name=default --timeout=300s -n istio-ingress

pod/default-istio-54598d985b-69wmr condition met

+ kubectl apply --server-side=true -f deploy/clusters/local/components/httproutes/httproutes.gen.yaml

httproute.gateway.networking.k8s.io/argocd serverside-applied

httproute.gateway.networking.k8s.io/bank serverside-applied

httproute.gateway.networking.k8s.io/migration-podinfo serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-secrets/bank-secrets.gen.yaml

configmap/jwt-key-writer serverside-applied

job.batch/jwt-key-writer serverside-applied

role.rbac.authorization.k8s.io/jwt-key-reader serverside-applied

role.rbac.authorization.k8s.io/jwt-key-writer serverside-applied

rolebinding.rbac.authorization.k8s.io/jwt-key-reader serverside-applied

rolebinding.rbac.authorization.k8s.io/jwt-key-writer serverside-applied

serviceaccount/jwt-key-writer serverside-applied

+ kubectl wait --for=condition=complete job.batch/jwt-key-writer -n bank-security --timeout=300s

job.batch/jwt-key-writer condition met

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-backend-config/bank-backend-config.gen.yaml

configmap/demo-data-config serverside-applied

configmap/environment-config serverside-applied

configmap/service-api-config serverside-applied

externalsecret.external-secrets.io/jwt-key serverside-applied

referencegrant.gateway.networking.k8s.io/istio-ingress serverside-applied

secretstore.external-secrets.io/bank-security serverside-applied

serviceaccount/bank-of-holos serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-accounts-db/bank-accounts-db.gen.yaml

configmap/accounts-db-config serverside-applied

service/accounts-db serverside-applied

statefulset.apps/accounts-db serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-ledger-db/bank-ledger-db.gen.yaml

configmap/ledger-db-config serverside-applied

service/ledger-db serverside-applied

statefulset.apps/ledger-db serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-contacts/bank-contacts.gen.yaml

deployment.apps/contacts serverside-applied

service/contacts serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-balance-reader/bank-balance-reader.gen.yaml

deployment.apps/balancereader serverside-applied

service/balancereader serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-userservice/bank-userservice.gen.yaml

deployment.apps/userservice serverside-applied

service/userservice serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-ledger-writer/bank-ledger-writer.gen.yaml

deployment.apps/ledgerwriter serverside-applied

service/ledgerwriter serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-transaction-history/bank-transaction-history.gen.yaml

deployment.apps/transactionhistory serverside-applied

service/transactionhistory serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/components/bank-frontend/bank-frontend.gen.yaml

configmap/demo-data-config serverside-applied

configmap/environment-config serverside-applied

configmap/service-api-config serverside-applied

deployment.apps/frontend serverside-applied

externalsecret.external-secrets.io/jwt-key serverside-applied

referencegrant.gateway.networking.k8s.io/istio-ingress serverside-applied

secretstore.external-secrets.io/bank-security serverside-applied

service/frontend serverside-applied

serviceaccount/bank-of-holos serverside-applied

+ kubectl apply --server-side=true -f deploy/clusters/local/gitops

application.argoproj.io/app-projects serverside-applied

application.argoproj.io/argocd-crds serverside-applied

application.argoproj.io/argocd serverside-applied

application.argoproj.io/bank-accounts-db serverside-applied

application.argoproj.io/bank-backend-config serverside-applied

application.argoproj.io/bank-balance-reader serverside-applied

application.argoproj.io/bank-contacts serverside-applied

application.argoproj.io/bank-frontend serverside-applied

application.argoproj.io/bank-ledger-db serverside-applied

application.argoproj.io/bank-ledger-writer serverside-applied

application.argoproj.io/bank-secrets serverside-applied

application.argoproj.io/bank-transaction-history serverside-applied

application.argoproj.io/bank-userservice serverside-applied

application.argoproj.io/cert-manager serverside-applied

application.argoproj.io/external-secrets-crds serverside-applied

application.argoproj.io/external-secrets serverside-applied

application.argoproj.io/gateway-api serverside-applied

application.argoproj.io/httproutes serverside-applied

application.argoproj.io/istio-base serverside-applied

application.argoproj.io/istio-cni serverside-applied

application.argoproj.io/istio-gateway serverside-applied

application.argoproj.io/istio-ztunnel serverside-applied

application.argoproj.io/istiod serverside-applied

application.argoproj.io/local-ca serverside-applied

application.argoproj.io/namespaces serverside-applied

application.argoproj.io/podinfo serverside-applied

+ exit 0
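The ordering visible in the output above (namespaces and CRDs first, then a wait for the CRDs to become established, then the controllers that depend on them) can be sketched as a few shell functions. This is a minimal sketch of the pattern, not the actual contents of scripts/apply. The `apply_component` and `apply_platform_base` helpers and the `KUBECTL` override are hypothetical names introduced here so the sequence can be previewed without a cluster.

```shell
# Hypothetical override: set KUBECTL="echo kubectl" to print the commands
# instead of running them. Defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# Directory holding the rendered manifests, as shown in the output above.
COMPONENTS="deploy/clusters/local/components"

# apply_component NAME applies the rendered manifest for one component.
apply_component() {
  $KUBECTL apply --server-side=true -f "${COMPONENTS}/$1/$1.gen.yaml"
}

# apply_platform_base applies the first few components in dependency order.
apply_platform_base() {
  # Namespaces and CRDs go first so later resources have somewhere to land.
  apply_component namespaces
  apply_component argocd-crds
  apply_component gateway-api
  apply_component external-secrets-crds

  # Block until the API server has registered every CRD.
  $KUBECTL wait --for=condition=Established crd --all --timeout=300s

  # Controllers that rely on those CRDs follow.
  apply_component external-secrets
  apply_component cert-manager
}
```

Running `KUBECTL="echo kubectl"` followed by `apply_platform_base` from the repository root prints the commands in order, which is a convenient way to review the sequence before applying it to a real cluster.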

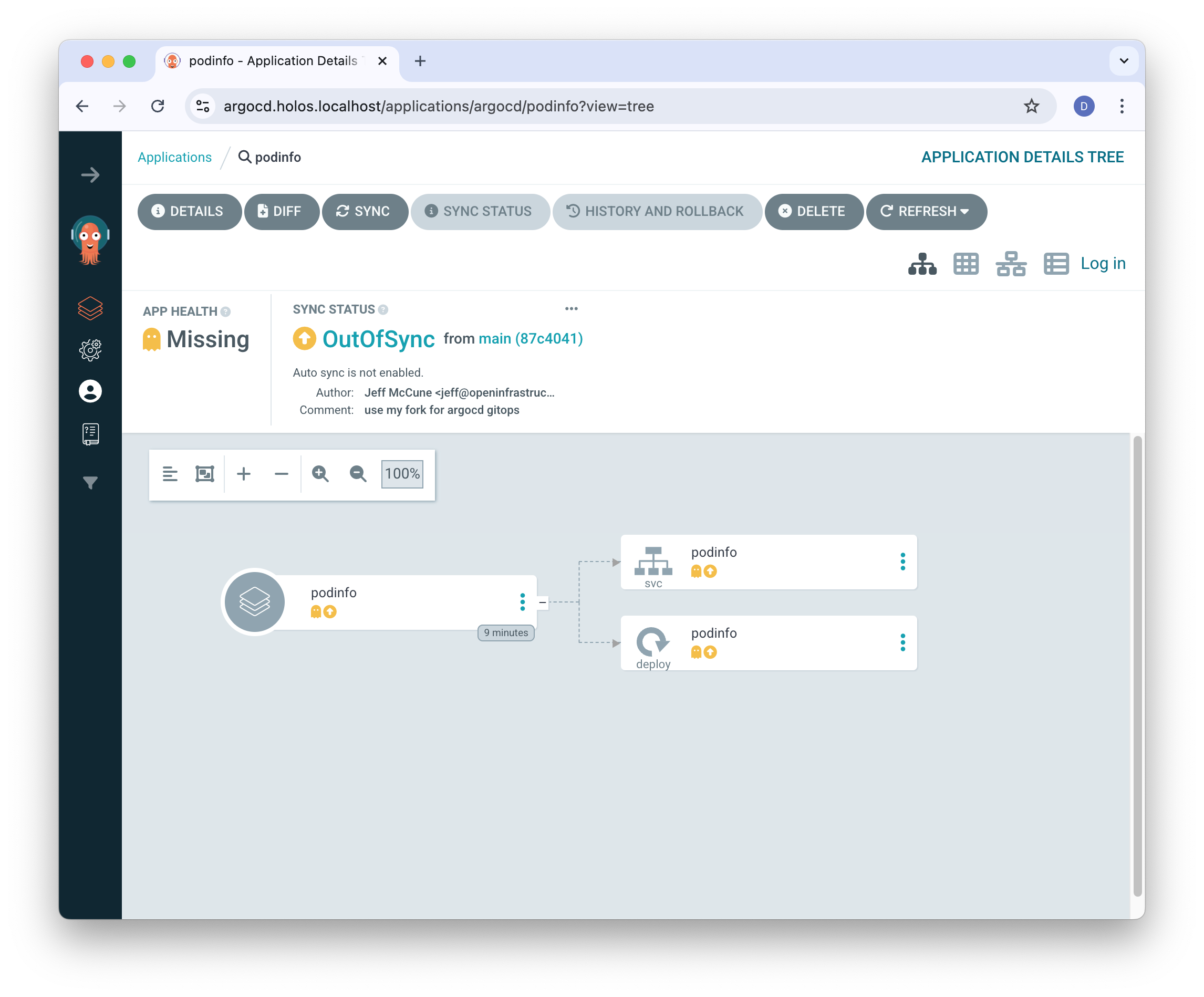

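Browse to https://argocd.holos.localhost/applications/argocd/podinfo. ArgoCD shows the podinfo component is missing from the cluster because the apply script created the podinfo Application but did not apply the component's manifests.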

If ArgoCD doesn't look like this for you, double check you've configured ArgoCD to use your RepoURL in projects/argocd-config.cue as we did in the Quickstart.
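One quick way to double check the RepoURL is to search the config file for the URL of your fork. The snippet below is a minimal sketch: `repo_url_matches` is a hypothetical helper, and it assumes the CUE file sets the field on a single line that contains both the text `RepoURL` and the URL.

```shell
# repo_url_matches FILE URL succeeds when FILE has a RepoURL line containing URL.
# Assumption: the field and its value appear on the same line in the CUE file.
repo_url_matches() {
  grep -F "RepoURL" "$1" | grep -qF "$2"
}

# Example use from the root of your fork (commented out so the sketch has no
# side effects; change YourName to match your fork):
# repo_url_matches projects/argocd-config.cue "https://github.com/YourName/bank-of-holos" \
#   && echo "ArgoCD points at your fork" \
#   || echo "RepoURL does not match your fork"
```

If the check fails, compare the value in projects/argocd-config.cue against the output of `git remote get-url origin`, then render and push again as in the Quickstart.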

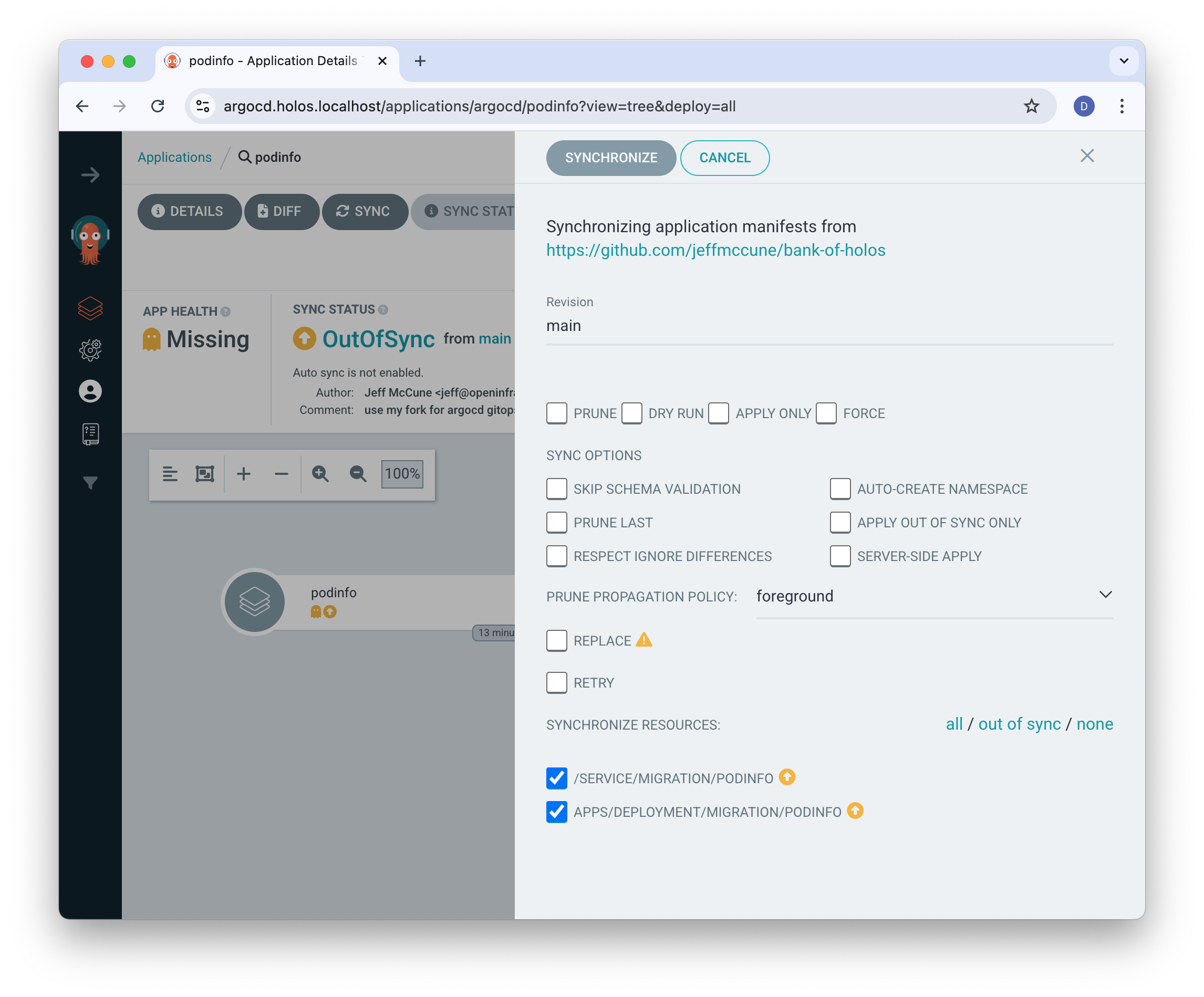

Sync the changes to deploy the service.

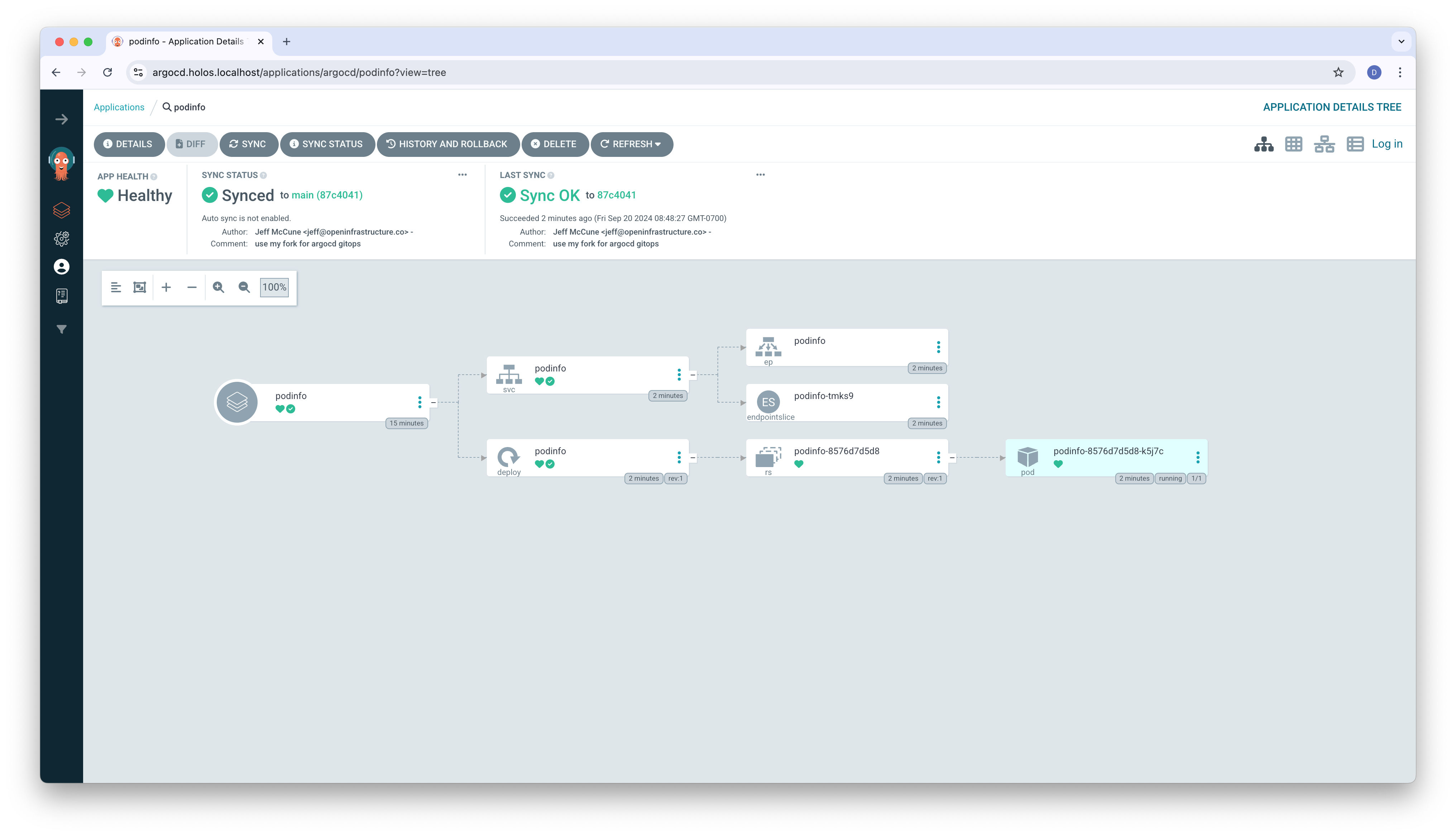

The Deployment and Service become healthy.
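The same health check can be approximated from the command line. This is a minimal sketch that assumes the podinfo resources land in the `migration` namespace created earlier by the apply script. `check_migration_health` and the `KUBECTL` override are hypothetical names used for illustration.

```shell
# Hypothetical override: set KUBECTL="echo kubectl" to print the commands
# instead of running them. Defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# check_migration_health lists the workloads in the migration namespace, then
# waits for every Deployment there to report the Available condition.
check_migration_health() {
  $KUBECTL get deployments,services --namespace migration
  $KUBECTL wait --for=condition=Available deployment --all \
    --namespace migration --timeout=300s
}
```

Call `check_migration_health` after syncing. The wait returns once the Deployments are available, or fails after the timeout.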

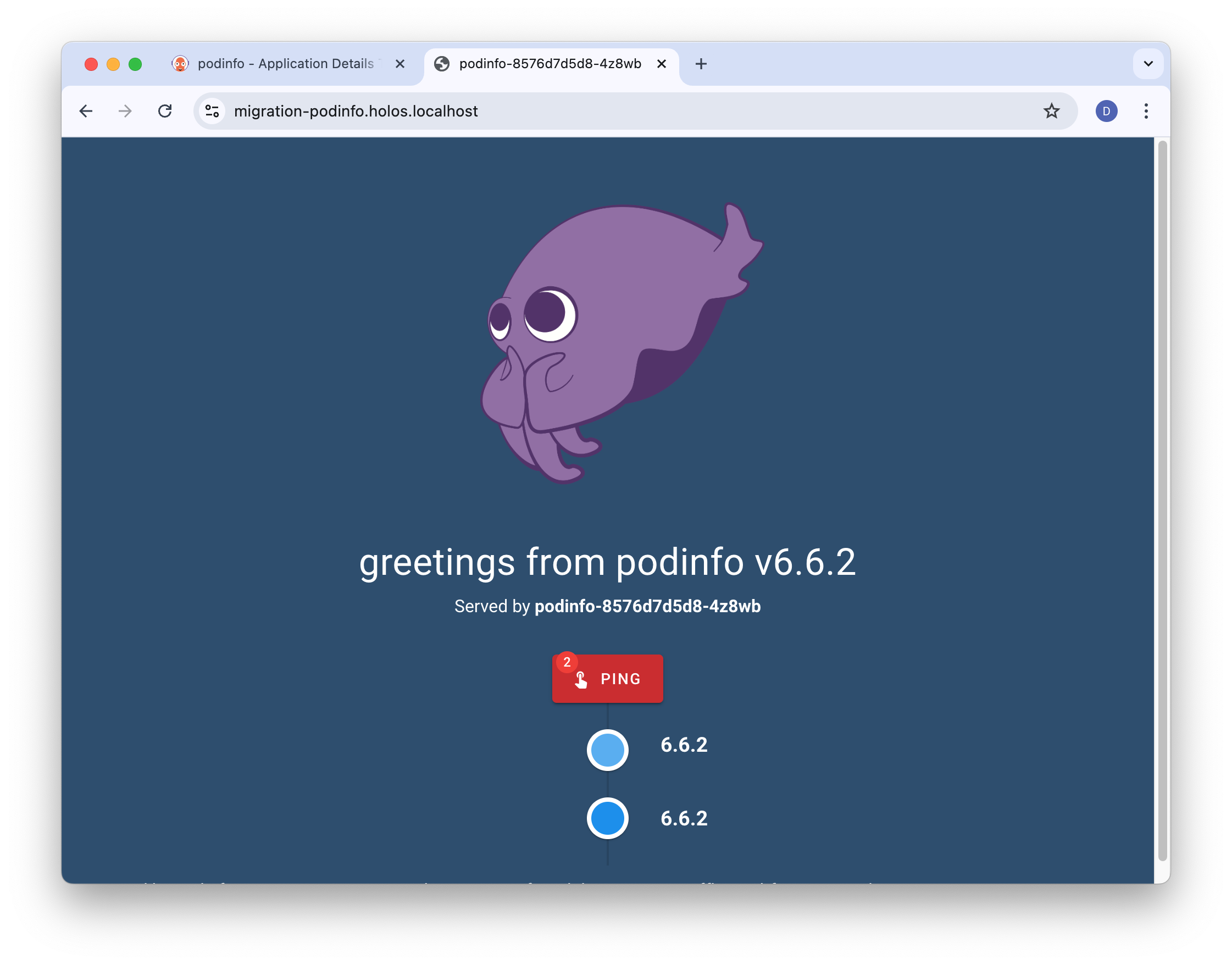

Once deployed, browse to https://migration-podinfo.holos.localhost/ to see the podinfo service migrated onto the Bank of Holos platform.

The migration team has successfully taken the Helm chart from the acquired company and integrated the podinfo Service into the bank's software development platform!

Next steps

Now that we've seen how to bring a Helm chart into Holos, let's move on to day 2 platform management tasks. The Change a Service guide walks through a typical configuration change a team needs to roll out after a service has been deployed for some time.